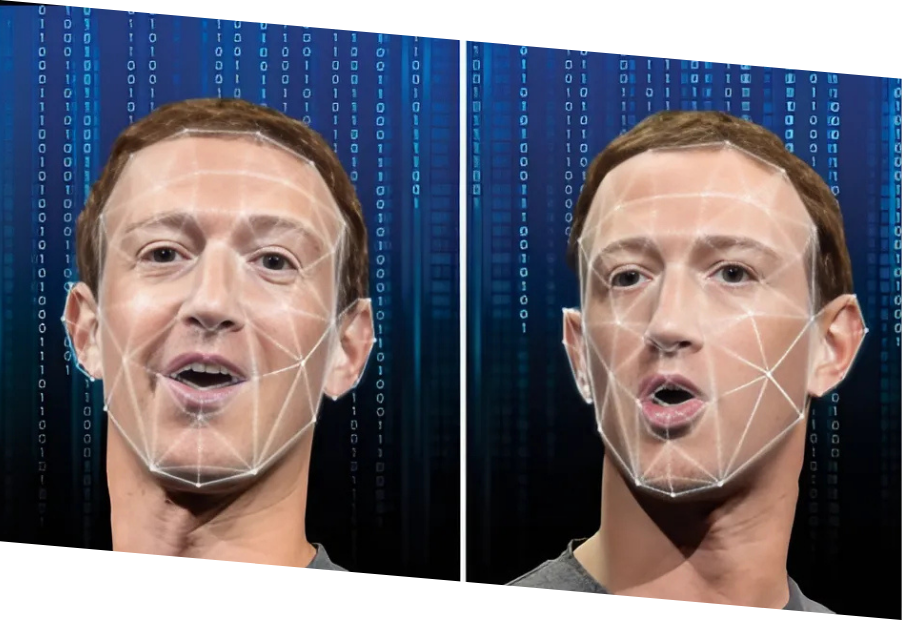

Artificial intelligence (AI) has revolutionised our world, making many processes more efficient and tasks more accessible. However, alongside its many benefits, AI poses significant risks, particularly through technologies such as deepfakes.

Deepfakes are highly convincing, realistic alterations of existing media, produced by AI-driven algorithms that manipulate images, videos, or audio to mimic real people. For example, a range of apps and software can swap faces in videos or pictures, animate or alter images, mimic voices with uncanny accuracy, or even generate entirely fabricated scenarios that look real, at least at first glance. These creations are powered by machine learning models that analyse vast amounts of data to replicate facial features, voices, and other visual cues.

While this technology has incredible potential for creative and educational uses, its dangers cannot be overlooked. Initially seen as an entertainment novelty for creating funny or exclusive content, deepfake technology has since been put to harmful uses that threaten privacy, reputations, legal processes and societal trust.

Misinformation and fake news

One of the most alarming dangers of deepfakes lies in their ability to spread false information by impersonating people in various situations. Fake videos of public figures or private individuals making inflammatory statements, behaving inappropriately or appearing in compromising situations can go viral, sowing discord and confusion. When done well, deepfakes are so realistic that audiences struggle to tell fact from fiction. This can erode trust in legitimate sources of information, ruin reputations and job prospects, and even fuel defamation campaigns, political conflict and criminal accusations.

Cyberbullying and harassment

Deepfakes have been weaponised for personal attacks, particularly against vulnerable individuals, with fake videos or images used for cyberbullying, blackmail or reputational harm. Because the content is so realistic, victims often struggle to prove that it is fabricated, leaving them without recourse and deeply affecting their lives.

Financial scams and fraud

AI-driven voice imitation has enabled criminals to impersonate authorities, CEOs, employees, artists or loved ones in phone calls or audio messages, convincing victims to transfer money or divulge sensitive information. Deepfaked faces can also be used to try to bypass facial recognition and gain access to certain devices. These schemes exploit trust and can cause significant financial damage.

Erosion of trust in media

As deepfakes become harder to detect, the public may begin to question the authenticity of all media and doubt even credible sources. This can undermine journalism, scientific evidence and even personal communications, creating an environment of constant scepticism in which conspiracy theories thrive.

Digital skills education is the first line of defence against deepfake threats. Knowing that such technologies exist and what they are capable of is essential for recognising them and countering their effects. Here are some steps to protect yourself against their dangers:

- Verify sources: Always double-check the origin of media content, especially if it evokes a strong emotional reaction. Reputable news outlets and fact-checking services can help verify authenticity.

- Analyse consistency: Check that the content, its source and its metadata, as well as any behaviour or requests it shows, are consistent with other sources and with earlier content from or about the person or people depicted.

- Use AI detection tools: Tools and software for detecting deepfakes are emerging, although detection remains locked in a constant race with deepfake creators.

- Promote digital literacy: Teaching digital literacy, such as understanding how media is created and manipulated and recognising the telltale signs of audio and visual manipulation, helps individuals critically evaluate what they see and hear online.

The DigiCity project equips learners aged 16 to 25 with the skills they need to navigate digital challenges like deepfakes, for example by showing their real-world applications and consequences in an engaging, interactive story told through a video game and an escape game. Through game-based learning, participants develop the critical thinking skills needed to identify and respond to synthetic media.

By fostering awareness and teaching practical strategies, DigiCity empowers a generation of responsible digital citizens who can combat the dangers of AI misuse. Together, we can ensure that the benefits of technology outweigh its risks and build a safer digital world!

Image source: towardsdatascience.com

Further reading:

- Stanford University IT. (2024). Dangers of Deepfake: What to watch for. https://uit.stanford.edu/news/dangers-deepfake-what-watch

- U.S. Department of Homeland Security. (n.d.). Increasing threat of DeepFake identities. https://www.dhs.gov/sites/default/files/publications/increasing_threats_of_deepfake_identities_0.pdf

- Lawal, O. (n.d.). What Is Deepfake and How Dangerous Is it For You and National Security? CyberPhorm. https://cyberphorm.com/what-is-deepfake-and-why-is-it-dangerous/

- Naik. (2022). Deepfake crimes: How Real And Dangerous They Are In 2021? CoolTechZone. https://cooltechzone.com/research/deepfake-crimes